In this discussion we will learn about smart intelligent agents in artificial intelligence. An intelligent agent is a technology that decides which steps to take in a task by using data provided by its environment, acting on that information according to objectives set by its creators.

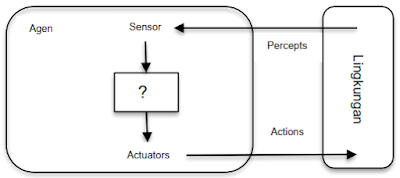

Agents and Environment

An intelligent agent system is a program that can be given tasks, completes them independently, and exhibits intelligence. With the help of an agent system, work that would otherwise take a long time can be completed better and faster. By embedding an intelligent agent in an application, it is hoped that the application can reason and choose the best move, so that it can come close to matching human ability.

See also: What is artificial intelligence (AI)? Basic Concepts of Artificial Intelligence

Smart Agent

An intelligent agent is anything that can perceive its environment through sensors and act upon that environment through actuators (Russell and Norvig). An agent is a computer system that is situated in an environment and is capable of acting autonomously in that environment in order to meet its design objectives (Wooldridge).

Abstraction of the Computational Model of an Agent

The figure shows an abstraction of the computational model of an agent. It can be seen that every action or activity carried out by the agent serves to fulfill or adapt to the conditions of the environment.

Internal Components of the BDI Agent Model

Figure 2.2 shows the internal components of a BDI (belief-desire-intention) agent model, which has:

- Events (sensory triggers),

- Beliefs (knowledge),

- Actions (actions),

- Goals (goals),

- Plans (agenda and plans).

Here are some examples of agents found in daily life:

- Human agents have eyes, ears, and similar organs as sensors, while the hands, feet, mouth, and other body parts serve as effectors.

Example 1 on Effector of Human Agent

Example 2 on Effector of Human Agent

Example 3 on Effector of Human Agent

- The ASIMO robot agent has a camera and infrared as sensors, and its drive equipment as effectors.

Example Robot ASIMO

- A robot vacuum cleaner agent perceives its own location and whether the floor is dirty as percepts. Its actions are move left (left), move right (right), suck up dust (suck), and no operation (noop).

- A rice harvesting robot agent perceives its own location and the condition of the rice, that is, whether the rice has been cut or not, as percepts. Its actions are turn right, turn left, move forward, move backward, and separate rice from non-rice.

Harvesting Tools in the Ancient Era

- A corn harvesting robot agent perceives its own location and whether the corn has been cut or not as percepts. Its actions are turn right, turn left, move forward, move backward, and separate corn from non-corn.

Old Corn Harvester Tool

Corn Harvester Today

- A sprayer or fertilizer drone agent perceives the location of plants and which areas have and have not been fertilized as percepts. Its actions are fly, turn right, turn left, move forward, move backward, and spray liquid fertilizer.

Manual Spray Tool

Pest Spray Tool with Drone

- A rice planting robot agent perceives its location and whether the soil is soft or hard as percepts. Its actions are to set the planting depth of the seed and the distance between plants.

Planting Rice in the Past

Modern Rice Planting

- A solar-powered mouse repellent robot agent can detect the presence of mice. Its action is to emit a sound that disturbs mice, with a certain range and noise intensity.

Agents of the Ancient Rat Repellent Robot

Modern Rat Repellent Robot Agent

- A software agent uses the graphical user interface as both its sensor and its actuator, for example in web, desktop, or mobile applications.

Graphical User Interface Software Agent

Agent Structure

The agent function (f) maps a percept history (P*) to an action (A), that is, f: P* → A.

Agent Structure
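The mapping from percept histories to actions can be illustrated with a lookup table. The following is only a minimal sketch for a two-square vacuum world: the square names "A" and "B", the table entries, and the class name `TableDrivenAgent` are assumptions made for illustration, not part of the original material.

```python
from typing import Dict, List, Tuple

# A percept is (location, status), e.g. ("A", "Dirty"). Names are hypothetical.
Percept = Tuple[str, str]

# Hypothetical, deliberately incomplete table: percept history -> action.
TABLE: Dict[Tuple[Percept, ...], str] = {
    (("A", "Clean"),): "right",
    (("A", "Dirty"),): "suck",
    (("B", "Clean"),): "left",
    (("B", "Dirty"),): "suck",
    (("A", "Dirty"), ("A", "Clean")): "right",
    (("A", "Clean"), ("B", "Dirty")): "suck",
}


class TableDrivenAgent:
    """Implements f: P* -> A by looking up the whole percept history."""

    def __init__(self, table: Dict[Tuple[Percept, ...], str]):
        self.table = table
        self.history: List[Percept] = []

    def __call__(self, percept: Percept) -> str:
        # Append the new percept, then look up the full history so far.
        self.history.append(percept)
        return self.table.get(tuple(self.history), "noop")


if __name__ == "__main__":
    agent = TableDrivenAgent(TABLE)
    for p in [("A", "Dirty"), ("A", "Clean")]:
        print(p, "->", agent(p))
```

The table grows very quickly with the length of the history, which is one reason practical agents use more compact programs than a literal table.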

The task of studying artificial intelligence is to create an agent. An agent also needs an architecture, which can be an ordinary computer or a computer with special hardware for a particular job, such as processing camera images or filtering sound input. The architecture makes the percepts from the environment available through its sensors, runs the agent program, and passes the chosen actions to the environment through its actuators. The relationship between agent, architecture, and program can be summarized as follows:

agent = architecture (hardware) + program (software)
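One way to picture this relationship is to keep the two parts in separate pieces of code. The sketch below is an assumption-laden illustration: `Architecture` merely simulates sensors and actuators with Python lists, and `program` is a hypothetical one-line agent program.

```python
from typing import Callable, List


class Architecture:
    """Stands in for the hardware: it owns the (simulated) sensors and actuators."""

    def __init__(self, readings: List[str]):
        self._readings = list(readings)  # queued sensor readings (simulated)
        self.executed: List[str] = []    # actions sent to the actuators

    def has_input(self) -> bool:
        return bool(self._readings)

    def sense(self) -> str:
        return self._readings.pop(0)

    def act(self, action: str) -> None:
        self.executed.append(action)


def program(percept: str) -> str:
    """Hypothetical agent program (the software part)."""
    return "suck" if percept == "dirty" else "noop"


class Agent:
    """agent = architecture (hardware) + program (software)."""

    def __init__(self, architecture: Architecture, program: Callable[[str], str]):
        self.architecture = architecture
        self.program = program

    def run(self) -> List[str]:
        # Sense, decide, act until the simulated sensor has no more readings.
        while self.architecture.has_input():
            percept = self.architecture.sense()
            self.architecture.act(self.program(percept))
        return self.architecture.executed


if __name__ == "__main__":
    print(Agent(Architecture(["dirty", "clean", "dirty"]), program).run())
```

Keeping the program separate means the same decision logic could, in principle, run on a different architecture.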

Rationality

An agent that always tries to optimize the value of its performance measure is called a rational agent. An agent is rational if it chooses the best available action at every moment, given what it knows about the state of the environment at that time. For each possible percept sequence, a rational agent should choose an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever knowledge the agent has. Rational agents are therefore expected to do the right thing. The right action is the one that causes the agent to be most successful (Stuart Russell, Peter Norvig, 2003).

Performance measures (of rational agents) are usually defined by the agent designer and reflect what the agent is expected to achieve, for example fitness value, fastest time, shortest distance, or most accurate results. A rational agent is also called an intelligent agent. From this perspective, artificial intelligence can be viewed as the study of the principles and design of artificial rational agents. Several concepts describe agent behavior:

- Rational agents: agents who do the right thing.

- Performance measure: how successful an agent is.

- A standard is needed to measure performance, by observing the conditions that occur.

Examples of agent behavior include:

• Agent (vacuum cleaner): the goal is to clean the dirty floor. Performance measures of the vacuum cleaner:

- Amount of Dirt cleaned

- Total electricity consumption

- Amount of noise

- Time required
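The four criteria above could be combined into a single score. The sketch below assumes a simple weighted sum; the weights, the units, and the names `VacuumRun` and `performance` are hypothetical choices for illustration, since the text does not say how the criteria should be weighed against each other.

```python
from dataclasses import dataclass


@dataclass
class VacuumRun:
    dirt_cleaned: int   # number of dirty squares cleaned
    energy_kwh: float   # total electricity consumption
    noise_db: float     # amount of noise
    minutes: float      # time required


def performance(run: VacuumRun,
                w_dirt: float = 10.0,
                w_energy: float = 2.0,
                w_noise: float = 0.25,
                w_time: float = 0.5) -> float:
    """Reward cleaned dirt, penalize energy, noise, and time (assumed weights)."""
    return (w_dirt * run.dirt_cleaned
            - w_energy * run.energy_kwh
            - w_noise * run.noise_db
            - w_time * run.minutes)


if __name__ == "__main__":
    quiet = VacuumRun(dirt_cleaned=8, energy_kwh=0.5, noise_db=60.0, minutes=20.0)
    loud = VacuumRun(dirt_cleaned=8, energy_kwh=1.0, noise_db=70.0, minutes=15.0)
    print(performance(quiet), performance(loud))
```

Changing the weights changes which run counts as better, which is why the performance measure has to be fixed by the designer before the agent is built.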

• Agent of change (student): the goals are graduating from college on time, working at a big company, founding a big company (becoming an entrepreneur), being wealthy or financially secure, and getting married. The performance measures are GPA and monthly income or salary.

Before creating an agent, you should know well:

- All possible percepts and actions that the agent can receive and carry out.

- The goal or performance measure that the agent is to achieve.

- What kind of environment the agent will operate in.

Rationality vs Omniscience

- Rationality: knowing the expected outcome of an action and acting accordingly.

- Omniscience: knowing the actual outcome of every action, which is impossible in reality.

PEAS

PEAS stands for Performance measure, Environment, Actuators, and Sensors. Here are some examples of PEAS descriptions:

1. “Smart car/taxi” agent:

- Performance measure: safe, fast, takes the correct route, obeys traffic rules, comfortable ride, correct fare

- Environment: roads, traffic, pedestrians, customers

- Actuators: steering wheel, accelerator, brakes, left/right turn signals, horn

- Sensors: camera, sonar (obstacle detection), speedometer, GPS, fuel gauge, engine sensors, keyboard or touch screen

2. “Health diagnosis system” expert system agent:

- Performance measure: patient health, low and affordable cost, operation under a supervising institution and in compliance with the law (Dinkes, BPJS, etc.)

- Environment: patient, hospital/clinic/health center, staff

- Actuators: monitor screen (displays questions, diagnoses, treatments, referrals)

- Sensors: keyboard (entry of symptoms, results of the initial examination, and patient answers)

3. “Garbage sorting and picking robot” agent:

- Performance measure: percentage of picked items that really are trash, or of a targeted type of trash that is picked correctly

- Environment: a running machine whose surface carries piles of items, trash or otherwise

- Actuators: jointed robot arm and hand assembly

- Sensors: camera, angle sensors at the joints of the robot

4. “Interactive English tutor” agent:

- Performance measure: maximize the student's score on the TOEFL or a similar test

- Environment: a private session or a regular class with one student or a group of students

- Actuators: monitor screen (exercises, explanations, suggestions, corrections, and answer solutions)

- Sensors: Keyboard
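A PEAS description is essentially a small record with four fields, so it can be written down as data. The sketch below encodes the taxi and tutor examples; the class name `PEAS`, its field names, the `summary` helper, and the shortened item labels are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PEAS:
    agent: str
    performance: Tuple[str, ...]
    environment: Tuple[str, ...]
    actuators: Tuple[str, ...]
    sensors: Tuple[str, ...]

    def summary(self) -> str:
        """Short overview of how many items each PEAS field holds."""
        return (f"{self.agent}: {len(self.performance)} performance criteria, "
                f"{len(self.environment)} environment elements, "
                f"{len(self.actuators)} actuators, {len(self.sensors)} sensors")


# Labels shortened from the taxi example in the text.
TAXI = PEAS(
    agent="smart taxi",
    performance=("safe", "fast", "correct route", "obeys traffic rules",
                 "comfortable", "correct fare"),
    environment=("roads", "traffic", "pedestrians", "customers"),
    actuators=("steering wheel", "accelerator", "brakes", "turn signal", "horn"),
    sensors=("camera", "sonar", "speedometer", "GPS", "fuel gauge",
             "engine sensors", "keyboard or touch screen"),
)

TUTOR = PEAS(
    agent="interactive English tutor",
    performance=("maximize test score",),
    environment=("private session", "regular class"),
    actuators=("monitor screen",),
    sensors=("keyboard",),
)

if __name__ == "__main__":
    print(TAXI.summary())
    print(TUTOR.summary())
```

Writing the description down this way makes gaps visible early, for example an agent whose performance measure cannot be observed with the listed sensors.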

Agent Characteristics

An agent has characteristics that describe its abilities. The more of these characteristics an agent has, the smarter the agent is.

The main characteristics of an agent are:

- Autonomous: the ability to perform tasks and make decisions independently, without intervention from outside parties such as other agents, humans, or other entities.

- Reactive: the ability to adapt quickly to changes in information in the environment.

- Proactive: goal-oriented behavior, always taking the initiative to achieve goals.

- Flexible: the agent must have multiple ways to reach its goal.

- Robust: the agent must be able to recover if an action or a plan fails.

- Rational: the ability to act in accordance with its tasks and knowledge, without doing things that lead to conflicting actions.

- Social: in carrying out its duties, the agent can communicate and coordinate well with humans or with other agents.

- Situated: the agent must exist and run in a particular environment.

Agent Architecture

In the black box concept, the agent receives input (percepts) from outside and processes it to produce output (actions) based on that input. Brenner proposed a model for this process that consists of the stages of interaction, information fusion, information processing, and action. The BDI model describes an agent in terms of belief, desire, and intention:

• Belief:

- What the agent knows and does not know about its environment.

- In other words, belief is the knowledge or information the agent has obtained about its environment.

• Desire:

- Goals, tasks to be completed by the agent, or something the agent wants to achieve.

• Intention:

- Plans drawn up to achieve the goals.

An example of an agent architecture is the design of an intelligent agent system for monitoring company stock. Its percepts and actions are:

- Percepts. The percepts used in the system include data on new suppliers, data on goods sold, data on goods purchased, and data on goods that have arrived.

- Actions. The actions are to compare the prices of suppliers' goods, compare the delivery times of goods, choose suppliers, and determine inventory levels.
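To make the belief, desire, and intention roles concrete, here is a minimal sketch of a stock-monitoring agent. Everything in it is an assumption for illustration: the class `StockAgent`, the reorder threshold, the event names, and the rule of picking the cheapest supplier are not taken from the system described above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class StockAgent:
    reorder_level: int = 10  # hypothetical threshold for "low stock"
    beliefs: Dict[str, int] = field(default_factory=dict)  # item -> units in stock
    desires: List[str] = field(default_factory=list)       # items to restock
    intentions: List[str] = field(default_factory=list)    # planned orders

    def perceive(self, event: str, item: str, qty: int) -> None:
        """Update beliefs from a percept: goods 'sold' or goods 'arrived'."""
        current = self.beliefs.get(item, 0)
        if event == "sold":
            self.beliefs[item] = current - qty
        elif event == "arrived":
            self.beliefs[item] = current + qty

    def deliberate(self) -> List[str]:
        """Desire: every item whose believed stock is below the threshold."""
        self.desires = [item for item, units in sorted(self.beliefs.items())
                        if units < self.reorder_level]
        return self.desires

    def plan(self, prices: Dict[str, Dict[str, float]]) -> List[str]:
        """Intention: order each desired item from its cheapest supplier."""
        self.intentions = []
        for item in self.desires:
            supplier = min(prices[item], key=prices[item].get)
            self.intentions.append(f"order {item} from {supplier}")
        return self.intentions


if __name__ == "__main__":
    agent = StockAgent()
    agent.perceive("arrived", "rice", 30)
    agent.perceive("sold", "rice", 25)
    agent.perceive("arrived", "sugar", 50)
    agent.deliberate()
    print(agent.plan({"rice": {"S1": 12.0, "S2": 11.5}, "sugar": {"S1": 9.0}}))
```

A fuller design would also compare delivery times, as the action list mentions; the sketch only compares prices to stay short.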

Types of Agents

To build an intelligent agent, there are five types of agent programs that implement the mapping from the percepts received to the actions taken:

1. Simple Reflex Agents

Simple reflex agents are the simplest type because they only apply condition-action rules. If a certain condition occurs, the agent simply responds with a certain action. For example, a taxi driver agent given the condition “the car in front is braking” responds with the action “step on the brake”.
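A condition-action agent can be sketched as an ordered list of rules that is scanned for the first match. In the sketch below the percept keys, the second rule, and the default action are hypothetical; only the braking rule comes from the example in the text.

```python
from typing import Callable, Dict, List, Tuple

Percept = Dict[str, bool]
Rule = Tuple[Callable[[Percept], bool], str]  # (condition, action)

RULES: List[Rule] = [
    # Rule from the text: if the car in front is braking, step on the brake.
    (lambda p: p.get("car_in_front_is_braking", False), "step_on_brake"),
    # Hypothetical extra rule, added only to show that rules are ordered.
    (lambda p: p.get("red_light", False), "stop"),
]


def simple_reflex_agent(percept: Percept,
                        rules: List[Rule] = RULES,
                        default: str = "keep_driving") -> str:
    """Return the action of the first rule whose condition holds."""
    for condition, action in rules:
        if condition(percept):
            return action
    return default


if __name__ == "__main__":
    print(simple_reflex_agent({"car_in_front_is_braking": True}))
    print(simple_reflex_agent({}))
```

The agent looks only at the current percept, so it has no way to react to anything that the percept does not show directly.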

Structure of Simple Reflex Agents

2. Model-Based Reflex Agents

Simple reflex agents perform well only if the environment that produces the percepts does not change. For example, suppose the taxi driver agent can only recognize cars of the latest model. If a car of an older model is in front, the agent cannot interpret the percept and so does not brake. In this case a model-based reflex agent is needed, one that continuously keeps track of the environment so that it can be perceived well. This agent adds a model of the world, that is, knowledge of how the world works. A model-based reflex agent thus maintains the state of the world using its internal model and then chooses an action in the same way as a simple reflex agent.
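The idea of keeping internal state can be sketched as follows. This is an illustration under stated assumptions: the agent is assumed to receive the distance to the car in front (`gap_m`), and it infers braking from a shrinking gap rather than from recognizing a particular car model. The 20 m threshold and all names are hypothetical.

```python
from typing import Dict, Optional


class ModelBasedTaxiAgent:
    """Keeps an internal state (last gap, whether the gap is closing)."""

    def __init__(self, safe_gap_m: float = 20.0):
        self.safe_gap_m = safe_gap_m  # hypothetical safety threshold
        self.last_gap_m: Optional[float] = None
        self.closing = False

    def update_state(self, percept: Dict[str, float]) -> None:
        # World model (assumed): a shrinking gap means the car in front is slowing.
        gap = percept["gap_m"]
        self.closing = self.last_gap_m is not None and gap < self.last_gap_m
        self.last_gap_m = gap

    def __call__(self, percept: Dict[str, float]) -> str:
        self.update_state(percept)
        # Condition-action rule applied to the internal state, not the raw percept.
        if self.closing and self.last_gap_m < self.safe_gap_m:
            return "step_on_brake"
        return "keep_driving"


if __name__ == "__main__":
    agent = ModelBasedTaxiAgent()
    for gap in [30.0, 25.0, 15.0, 18.0]:
        print(gap, "->", agent({"gap_m": gap}))
```

Because the decision depends on the remembered previous gap, the same percept can lead to different actions at different times, which a simple reflex agent cannot do.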

Model-Based Reflex Agents

3. Goal-Based Agents

Knowledge of the overall state of the environment is not always enough. The agent must also be given information about the goal, which is a state that the agent wants to achieve. The agent then keeps working until it reaches its goal. Search and planning are the two kinds of work done to achieve the agent's goals. A goal-based agent adds this information about the goal.
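Search toward a goal state can be sketched with breadth-first search over a small road map. The map `ROADS`, the place names, and the function `plan_route` are hypothetical; breadth-first search is just one of many search methods a goal-based agent might use.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical one-way road map: place -> directly reachable places.
ROADS: Dict[str, List[str]] = {
    "Station": ["Market", "School"],
    "Market": ["Hospital"],
    "School": ["Market", "Airport"],
    "Hospital": ["Airport"],
    "Airport": [],
}


def plan_route(start: str, goal: str,
               roads: Dict[str, List[str]] = ROADS) -> Optional[List[str]]:
    """Breadth-first search: returns a route with the fewest hops, or None."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in roads.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None


if __name__ == "__main__":
    print(plan_route("Station", "Airport"))
```

The agent stops searching as soon as the goal state is reached; it does not ask whether that route is fast, safe, or cheap.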

Goal-Based Agents

4. Utility-Based Agents

Achieving goals alone is not enough to produce agents with high-quality behavior. For example, a taxi driver agent may have several actions that all reach the destination, but some are faster, safer, or cheaper than others. Goal-based agents do not distinguish between states that are good and states that are bad for the agent.

A utility-based agent considers how good each state is for the agent, so that it can do its job much better. In some cases, however, the agent cannot satisfy all criteria at once. For a taxi driver, for example, reaching the destination faster conflicts with driving more safely, because a faster taxi ride is more dangerous than a relaxed one.
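The trade-off between speed, safety, and cost can be sketched with a utility function that scores each candidate route. The routes, their numbers, and the weights below are invented for illustration, and a weighted sum is only one possible form of utility function.

```python
from typing import Dict

# Hypothetical routes that all reach the destination.
ROUTES: Dict[str, Dict[str, float]] = {
    "highway":      {"minutes": 20, "risk": 0.30, "cost": 8.0},
    "main_road":    {"minutes": 30, "risk": 0.10, "cost": 5.0},
    "back_streets": {"minutes": 45, "risk": 0.05, "cost": 4.0},
}


def utility(route: Dict[str, float],
            w_time: float = 1.0,
            w_risk: float = 100.0,
            w_cost: float = 1.0) -> float:
    """Higher is better: time, risk, and cost all count against a route."""
    return -(w_time * route["minutes"]
             + w_risk * route["risk"]
             + w_cost * route["cost"])


def best_route(routes: Dict[str, Dict[str, float]] = ROUTES, **weights: float) -> str:
    """Pick the route with the highest utility under the given weights."""
    return max(routes, key=lambda name: utility(routes[name], **weights))


if __name__ == "__main__":
    print(best_route())               # balanced weights
    print(best_route(w_risk=0.0))     # ignores safety
    print(best_route(w_risk=1000.0))  # safety above all
```

Changing the weight on risk changes the chosen route, which mirrors the conflict between faster and safer driving described above.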

Utility-Based Agents

5. Learning Agents

Learning agents learn from experience and improve their performance. The learning element is responsible for making improvements, the performance element is responsible for selecting external actions, and the critic provides feedback on how well the agent is doing.
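How the three components interact can be sketched with a very small loop. The action names, the critic's ratings, and the update rule (moving an estimate toward the feedback by a fixed learning rate) are assumptions for illustration, not a method stated in the text.

```python
from typing import Dict, List


class LearningAgent:
    def __init__(self, actions: List[str], learning_rate: float = 0.5):
        self.values: Dict[str, float] = {a: 0.0 for a in actions}
        self.learning_rate = learning_rate

    def performance_element(self) -> str:
        """Selects the external action: the one currently rated best."""
        return max(self.values, key=self.values.get)

    def learning_element(self, action: str, feedback: float) -> None:
        """Makes improvements: moves the estimate toward the critic's feedback."""
        self.values[action] += self.learning_rate * (feedback - self.values[action])


def critic(action: str) -> float:
    """Hypothetical performance standard: smooth braking is rated better."""
    return {"brake_hard": -1.0, "brake_smoothly": 1.0}[action]


def train(agent: LearningAgent, steps: int) -> List[str]:
    """Run the act, criticize, learn loop and return the actions taken."""
    taken = []
    for _ in range(steps):
        action = agent.performance_element()
        agent.learning_element(action, critic(action))
        taken.append(action)
    return taken


if __name__ == "__main__":
    agent = LearningAgent(["brake_hard", "brake_smoothly"])
    print(train(agent, 3))
    print(agent.values)
```

This sketch always picks the currently best-rated action and never explores; complete learning agents usually also include a component that suggests new actions to try.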

Learning Agents

Environment Types

There are several types of environment in PEAS, including:

1. Fully observable – partially observable

If an agent's sensors can access the complete state of the environment, the environment is fully observable to the agent. In practice, an environment is effectively fully observable if the sensors detect all aspects that are relevant to the choice of action.

A fully observable environment is convenient because the agent does not need to maintain internal state to keep track of the environment. An environment can be partially observable because of noisy and inaccurate sensors or because parts of the state are missing from the sensor data.

2. Deterministic – stochastic

If the next state of the environment is completely determined by the current state and the action carried out by the agent, the environment is deterministic. Otherwise it is stochastic: the next state is not fully determined by the current state and the agent's action.

If the environment is deterministic except for the actions of other agents, the environment is strategic.

The game of Reversi is deterministic because the next state depends only on the current state and the move taken.

3. Episodic – sequential

In an episodic environment, the agent's experience is divided into short episodes. Each episode consists of the agent perceiving and then performing a single action. The quality of the agent's action depends only on the episode itself, because the next episode does not depend on the actions taken in previous episodes.

An episodic environment is simpler because the agent does not need to think ahead. In a sequential environment, by contrast, the current action can influence subsequent actions. Reversi is sequential because the agent must think about the next moves, and all the moves an agent takes are interdependent.

4. Static – dynamic

If the environment can change while the agent is deciding what to do, the environment is dynamic; otherwise it is static. A static environment is easier to deal with because the agent does not need to keep observing its surroundings while deciding on an action, nor worry about the passage of time.

If the environment itself does not change over time but the agent's performance score does, the environment is semidynamic. Reversi is static because while the agent is deciding on a move the environment does not change, and the agent's performance score is not affected either.

5. Discrete – continuous

If the percepts and actions that the agent receives and carries out are clearly defined, the environment is discrete. Chess is discrete because the possible moves are limited and well defined.

Taxi driving, on the other hand, is continuous, because the speed and location of the taxi take continuously changing values over time. Reversi is discrete because all percepts and actions are clearly defined by the rules of the game.

6. Single agent – multi agent

An agent solving a crossword puzzle is in a single-agent environment. A chess-playing agent is in a multi-agent environment.

Another distinction between agent environments is whether the agent cooperates with other agents or whether maximizing its own performance depends on the behavior of other agents. Reversi is multi-agent because the agent must think about the moves its opponent will take.
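The six dimensions can be recorded as a small data structure, which makes it easy to compare task environments side by side. In the sketch below, the Reversi entry follows the classification given in this section, with full observability added as an assumption because the whole board is visible. The taxi entry is an assumption based on the usual textbook classification; this section itself only states that taxi driving is continuous.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentType:
    name: str
    fully_observable: bool
    deterministic: bool
    episodic: bool
    static: bool
    discrete: bool
    single_agent: bool

    def describe(self) -> str:
        """Spell out the six properties using the terms from the text."""
        labels = [
            "fully observable" if self.fully_observable else "partially observable",
            "deterministic" if self.deterministic else "stochastic",
            "episodic" if self.episodic else "sequential",
            "static" if self.static else "dynamic",
            "discrete" if self.discrete else "continuous",
            "single-agent" if self.single_agent else "multi-agent",
        ]
        return f"{self.name}: " + ", ".join(labels)


REVERSI = EnvironmentType("Reversi", fully_observable=True, deterministic=True,
                          episodic=False, static=True, discrete=True,
                          single_agent=False)

# Assumed classification for taxi driving (only "continuous" is stated above).
TAXI = EnvironmentType("Taxi driving", fully_observable=False, deterministic=False,
                       episodic=False, static=False, discrete=False,
                       single_agent=False)

if __name__ == "__main__":
    print(REVERSI.describe())
    print(TAXI.describe())
```

Environments that land on the harder side of most dimensions, as taxi driving does here, generally call for the more capable agent types described earlier.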
